Geoffrey Hinton, the 75-year-old British-Canadian cognitive psychologist and computer scientist widely known as the father of Artificial Intelligence (AI), has left Google, expressing fears that AI research is heading in the wrong direction. Hinton, who did pioneering work on artificial neural networks, now believes that this technology poses a danger to humanity.

In 2013, Google acquired DNN Research, a startup founded by Geoffrey Hinton. Shortly before, Professor Hinton’s group at the University of Toronto had developed a system based on deep learning and convolutional neural networks that won the 2012 ImageNet computer vision competition by a huge margin. For the next ten years, Prof Hinton divided his time between his university research and his work at Google. He has now left Google because he wants to be free to speak out about the risks of AI.

In fact, this is not the first time a scientist has spoken out about the ill effects of AI. In January 2015, the late Stephen Hawking, Elon Musk and many researchers working on AI signed an open letter calling for research on the societal impacts of AI. Later that year, many of them also warned of a possible military AI arms race and argued that efforts should be made, including by the United Nations (UN), to prevent the development of Lethal Autonomous Weapon Systems (LAWS, AI-based weapons) beyond meaningful human control. That letter noted that, unlike nuclear weapons, such weapons would not be difficult to develop.

Prof Hinton now believes that big tech companies, driven by profit, are racing to develop AI technologies that could cause serious harm to humanity. The main concern at present is products based on generative AI, the technology that powers popular chatbots such as ChatGPT. Google, for its part, maintains that it is working towards responsible AI.

Today, the power of AI and its usefulness in fields such as education, climate analysis, medicine, and drug and vaccine research are widely recognised. Militaries are known to be developing, and in some cases already using, AI systems, mainly for intelligence assessment, logistics and supply chain management, training, and simulations. Given AI’s current limitations, defence agencies appear to be holding back from using such systems extensively in actual combat roles, although some robotic machines and drones have already been combat tested. Still, the military is only one small user of the vast AI ecosystem now under development, and AI-based systems also pose major dangers in the civilian domain.

The present worry centres on ChatGPT-like tools, which pose several kinds of danger and could soon become instruments for creating misinformation. Fake news existed before ChatGPT, of course. However, tools such as ChatGPT, Chatsonic, OpenAI Playground, Jasper Chat, Bing AI and a few others offer video, audio and image-mixing capabilities, among other features, that could bring about a ‘revolution’ in fake news. People have already started using these tools to create memes and similar content, and GPT-4 can help make videos in minutes. Broadly, with such easy ways to tamper with data, misinformation can now be created in no time.

In March 2023, OpenAI’s CEO Sam Altman acknowledged in a television interview that powerful language models pose risks to society. He said that the ability to automatically generate text, images or code could be misused to launch disinformation campaigns or cyber-attacks, and because such software tools are easily available, anybody could exploit them. Notably, OpenAI has kept the technical details of its latest language model, GPT-4, secret. Transparency from the tech giants is important, but business interests evidently stand in the way. This is why people like Prof Geoffrey Hinton are worried.

History shows that scientists have at times felt deeply uncomfortable with their own discoveries. In 1867, Alfred Nobel invented dynamite, which came to be used in warfare. He was called a ‘Merchant of Death’, and this possibly made him realise how his invention had contributed to people being killed in wars. On November 27, 1895, he signed his last will, establishing a series of prizes for those whose work is of the greatest benefit to mankind.

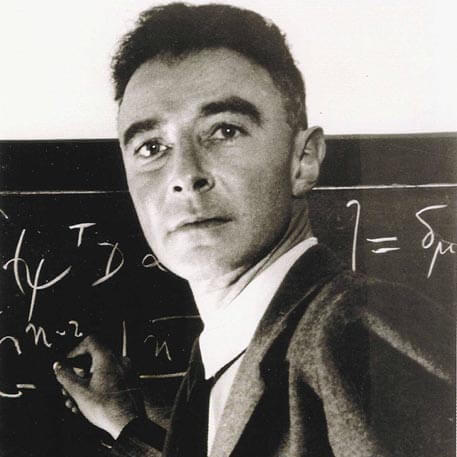

Physicist J. Robert Oppenheimer, the so-called father of the atomic bomb, was devastated when his invention was used on Hiroshima and Nagasaki. He felt he had blood on his hands and later tried to bring about international controls on nuclear weapons. There are many other cases in which scientists have expressed dismay at the harmful impact of their work on society.

Today, particularly since the arrival of ChatGPT, there have been major concerns about the ill effects of AI. On March 22, 2023, an open letter signed by more than 1,000 scientists was published calling for giant AI experiments to be paused for at least six months. It demanded a halt to the training of AI systems more powerful than GPT-4.


The main reason is that little planning is taking place for the ethical development of this technology. In recent months, various AI labs have been locked in an unruly race to bring very powerful tools to market, tools that even their creators may find difficult to stop from going rogue. The time has come for a meaningful debate on how to curb the uncontrolled growth of AI.